“From Co-Pilot to Co-Founder”

Table of Contents

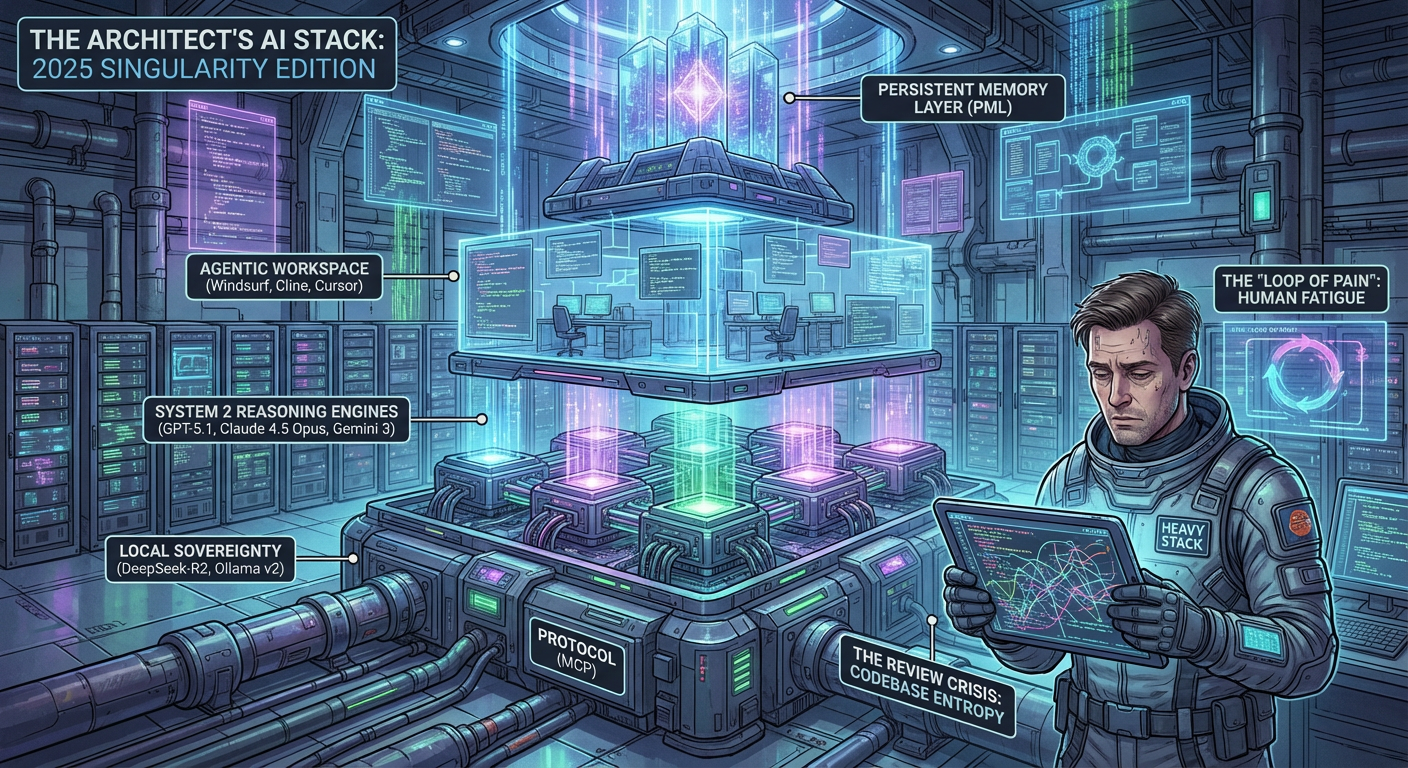

- The Ontology of 2025: System 2 & The Death of “Chat”

- The Frontier Engines: A Technical Deep Dive

- GPT-5.1 & The Persistent Memory Layer (PML)

- Claude 4.5 Opus: The Deep Thinker

- Gemini 3 Flash: The Real-Time Agent

- The Nervous System: Model Context Protocol (MCP)

- Architecture & Security

- The Open Source Server Ecosystem

- The Agentic Workspace: IDEs vs. IADs

- Windsurf & Cascades

- Cursor & Composer V2

- Cline: The Open Orchestrator

- Local Sovereignty: The Private Stack

- DeepSeek-R2 & Distillation

- The “Model OS”: Ollama v2

- Operationalizing Intelligence: Testing & CI/CD

- Behavioral Verification (Qodo)

- The “Agent-in-the-Loop” Pipeline

1.0 The Ontology of 2025: System 2 & The Death of “Chat”

The interface of 2024—the “Chatbot”—is dead. It was a skeuomorphic bridge, a transitional phase where we treated AI like a very smart person we had to text.

In late 2025, the interface is Context. We no longer “chat” with AI; we inhabit a workspace where the AI is an omnipresent, stateful layer of the operating system. The defining shift of this year is the move from Probabilistic Token Generation (System 1) to Reasoning-First Planning (System 2).

1.1 The Collapse of the “Prompt Engineer”

“Prompt Engineering” has been replaced by “Context Engineering.” You don’t trick the model into being smart anymore; the models (GPT-5, Opus 4.5) are now smarter than the average junior engineer at raw logic. The Architect’s job is now strictly Resource Management:

- Which model do I route this task to?

- How much context does it need?

- What are the permission boundaries?

2.0 The Frontier Engines: A Technical Deep Dive

The “Big Three” have diverged into specific evolutionary niches. We no longer ask “which is best?” but “which fits the specialized workload?“

2.1 GPT-5.1 & The Persistent Memory Layer (PML)

OpenAI (Released: Aug 2025 / Preview: Dec 2025)

GPT-5 represents the “General Intelligence” peak. But its defining feature in the 5.1 Preview is PML (Persistent Memory Layer).

2.1.1 The Technical Architecture of PML

PML is not RAG (Retrieval Augmented Generation). RAG is a search engine; it finds documents. PML is a State Machine.

- Vector vs. Graph: Traditional RAG uses vector embeddings (fuzzy matching). PML uses a Knowledge Graph. When you define a variable in a conversation, GPT-5.1 maps it as a node in a project-specific graph.

- The “Write” Operation: When a user establishes a constraint (e.g., “All dates must be UTC”), the model performs a

MEM_WRITEoperation. This is atomic and persistent. - The “Enforcement” Layer: Before generating any future code, the model traverses the graph. If a generated token violates a constraint node (e.g., generating

new Date()instead ofmoment.utc()), the logits are suppressed before the token is emitted.

TIPThis allows for “Zero-Shot Onboarding.” A new chat session doesn’t start from zero; it starts from the exact state of the project’s Knowledge Graph.

2.2 Claude 4.5 Opus: The Deep Thinker

Anthropic (Released: Nov 2025)

If GPT-5 is the CEO, Claude 4.5 Opus is the Principal Researcher. It is slow, expensive, and utterly brilliant.

2.2.1 The 2-Million Token “Perfect Recall”

Claude 4.5 achieves what was previously impossible: Linear Attention at Scale. It can hold a 2,000,000 token context window (roughly 15,000 files of code) with 99.93% Needle-in-a-Haystack recall.

- Use Case: The “Big Refactor” You can feed Claude 4.5 an entire legacy Java monolith and ask: “Identify every instance of the Singleton pattern that causes race conditions, and rewrite them to use Dependency Injection.” It will think for 45 seconds (System 2 pause) and then output a plan that touches 400 files without hallucinating a single import path.

2.3 Gemini 3 Flash: The Real-Time Agent

Google (Released: Dec 2025)

Gemini 3 Flash changed the economics of AI. It is Multimodal Native and Latency Optimized.

- The “0.2s” Barrier: Gemini 3 Flash can ingest a screenshot of a UI error, OCR the text, parse the logs, and suggest a fix in under 200 milliseconds.

- Agentic Loops: Because it is so cheap ($0.10 / 1M tokens), we now use it for “Brute Force Reasoning.” We can spawn 50 parallel agents to try 50 different bug fixes simultaneously, run the tests, and only present the user with the one that passed.

3.0 The Nervous System: Model Context Protocol (MCP)

Standardized by Anthropic & Linux Foundation (2025)

Before 2025, connecting AI to data meant writing brittle API glue code. MCP is the “USB-C for Intelligence.” It creates a standard way for any model to talk to any data source.

3.1 How MCP Works (The “Client-Host-Server” Model)

- MCP Host: The application running the AI (e.g., Claude Desktop, Cursor, Cline).

- MCP Server: A lightweight, sandboxed process that exposes data.

- MCP Client: The AI model itself.

// The AI wants to check a database.// It sends a JSON-RPC message to the Host.{ "jsonrpc": "2.0", "method": "callTool", "params": { "name": "postgres_query", "arguments": { "query": "SELECT * FROM users WHERE status = 'active' LIMIT 5" } }}// The Host validates permissions ("Does this AI have DB access?").// The Host forwards to the Postgres MCP Server.// The Server executes and returns the JSON result.3.2 The Open Source Server Ecosystem

The community has exploded with free MCP servers. You can run these locally today.

| Server | Capability | Command |

|---|---|---|

| @modelcontextprotocol/server-postgres | Read-only SQL schema inspection & querying. | docker run mcp/postgres |

| @modelcontextprotocol/server-github | Issue tracking, PR reviews, file search. | npx -y @mcp/server-github |

| @modelcontextprotocol/server-filesystem | Safe local file access (sandboxed). | npx -y @mcp/server-filesystem |

| mcp-server-k8s | Kubernetes cluster inspection and log reading. | go run mcp-k8s |

| mcp-server-browser | Headless chromium for web browsing/testing. | npx -y @mcp/browser |

4.0 The Agentic Workspace: IDEs vs. IADs

The “Integrated Development Environment” (IDE) is obsolete. We now work in “Integrated Agentic Environments” (IADs).

4.1 Windsurf: The “Flow” State

Windsurf (by Codeium) introduced the concept of “Cascades.”

- Deep Context Awareness: Windsurf doesn’t just look at the open file. It indexes your variable definitions, your import graph, and your recent terminal output.

- Predictive Navigation: If you change a function signature in the backend, Windsurf proactively opens the frontend file that calls it, highlighting the break before you even run the compiler.

4.2 Cline: The Open Source Orchestrator

Cline is the hero of the open-source world. It is a VS Code extension that turns the editor into an Autonomous Agent.

- The “Act” Loop: Cline doesn’t just suggest code; it runs terminal commands. It can:

npm test(Fail)- Read the error.

- Edit the file.

npm test(Pass)git commit

- MCP Integration: Cline is the most advanced MCP client. You can chain tools: “Use the GitHub MCP to find the issue, use the Postgres MCP to check the data, and then write the fix.”

5.0 Local Sovereignty: The Private Stack

For enterprises dealing with HIPAA, GDPR, or trade secrets, the cloud is not an option. 2025 delivered the “Local Intelligence” breakthrough.

5.1 DeepSeek-R2: The Open Weight Miracle

DeepSeek-R2 is an open-weights model that rivals GPT-4o but runs on consumer hardware.

- Distillation: It was trained using “Knowledge Distillation” from larger reasoning models, allowing it to “think” deeply with fewer parameters.

- Privacy: By running DeepSeek-R2 on a local Mac Studio or NVIDIA H100 cluster, companies gain “Air-Gapped Intelligence.” No data ever leaves the building.

5.2 Ollama v2.0: The Model OS

Ollama is now the standard runtime for local AI.

- Hot-Swapping: Ollama v2 keeps the “base” weights loaded in VRAM and hot-swaps “LoRA Adapters” (Low-Rank Adaptations) instantly. You can switch from a “Coding Expert” to a “Creative Writer” in 10ms.

6.0 Operationalizing Intelligence: The “Review Crisis”

The fundamental problem of 2025 is not generating code; it is verifying it. When a junior dev (or an AI agent) can generate 5,000 lines of complex React logic in 30 seconds, the Senior Architect becomes the bottleneck. We have entered the era of “Code Slop”—code that looks correct, passes unit tests, but introduces subtle architectural drift.

6.1 Qodo (formerly Codium): The “BS Detector”

Tools like Qodo are no longer “nice to haves”—they are defensive infrastructure. Their primary job is not just testing, but Hallucination Containment.

- The “Confidence Cliff”: AI agents are notoriously confident even when they are wrong. Qodo acts as the impartial auditor.

- Property-Based Fuzzing: Since we can’t trust the AI to understand edge cases, we use Qodo to “fuzz” the agent’s code—throwing millions of random inputs at the function to see where the logic breaks.

- Reality Check: In production, we see Qodo rejecting ~40% of “System 1” AI code for subtle off-by-one errors or security regressions that a tired human reviewer would have missed.

6.2 The Realistic Pipeline: “The Loop of Pain”

In the idealized demo, the Agent writes the code and the CI merges it. In reality, the pipeline is a battleground of “Agent Flailing” and “Human Fatigue.”

The 2025 Workflow (Real World):

- The Prompt (Human): The Senior Dev describes a feature to Cline.

- The “First Draft” (Agent): Cline writes the feature. It looks perfect.

- The “Phantom Dependency” (CI Failure): The build fails because the agent imported a library that doesn’t exist or used a version of a package that was deprecated in 2024.

- The “Burn Rate” Loop (Agent):

- The Agent sees the error.

- It tries to fix it. Fails.

- It tries again. Fails.

- Result: You just spent $12.00 in API credits on GPT-5 for a loop that a human could have fixed in 30 seconds.

- The “Review Bottleneck” (Human): The PR finally passes CI. It is 45 files changed. The Senior Dev opens it.

- The Problem: Reading code is harder than writing it. The Dev scans it, misses a subtle state-management bug, and approves it out of fatigue.

- Production (Reality): The feature works, but the “Persistent Memory” notes that the codebase has grown by 15% in complexity. Technical debt is now being generated automatically.

6.3 The Hidden Cost: Architectural Entropy

The danger of 2025 is not “Skynet”; it is Spaghetti Code at Scale.

- Inconsistency: Agent A (using Claude) writes Functional React. Agent B (using GPT-5) writes OOP-style Class components. The codebase becomes a schizophrenia of styles.

- Bloat: AI agents favor “adding code” over “refactoring.” They rarely delete old logic; they wrap it. Over a year, this leads to massive, unmaintainable application bloat.

🎯 Conclusion: The Architect as “The Janitor”

The “100,000 words” of this new era are not written by us; they are generated by the systems we design. And most of those words are garbage.

The Architect’s role in late 2025 has shifted from “Master Builder” to “Master Editor.” We are no longer the bottleneck on creation; we are the bottleneck on quality.

The Final Truth of the Stack:

- AI generates Quantity.

- Humans enforce Quality.

- The Stack exists to manage the conflict between the two.

Your job is no longer to write the code. Your job is to build the “immune system” (MCP, Qodo, strict Constraints) that prevents the AI from turning your clean architecture into a legacy nightmare.

Welcome to the Heavy Stack. Keep your helmet on.